Grocery Sales Forecasting

Background

A successful supply chain is a central and recurring concept in modern retail inventory management. Inventory managers must ensure that the right amount of each SKU is in stock and that customer orders are fulfilled accurately without an inventory miss. Knowing the demand for each SKU and the schedule of planned orders is critical to good inventory management, which is why supply chain forecasting is necessary.

Forecasting therefore has several benefits in a fast-moving retail store scenario:

- Plan better for products with a short shelf life, thereby avoiding wastage and improving operating margin.

- Plan better for products with a longer lead time for receiving those products/SKUs.

- Keep inventory better planned, which is key to a more lucrative and healthy grocery business in the long run.

Objective

The grocery sales forecast use case predicts demand at the product/SKU level and is the basis for time and resource planning by purchase managers and store managers.

Relevance of Xceed

Xceed Analytics provides a single integrated data and AI platform that reduces friction in bringing data together and building machine learning models rapidly. It further empowers everyone, including Citizen Data Engineers/Scientists, to bring data together and build and deliver data and ML use cases rapidly. Its low-code/no-code visual designer and model builder can be leveraged to bridge the gap and expand the availability of key data science and engineering skills.

This use case showcases how to create, train/test, and deploy a grocery sales prediction regression model. The datasets were collected from Kaggle. These include the holiday events dataset, oil dataset, grocery sales dataset, stores dataset, and transactions dataset. Xceed provides a no-code environment for the end-to-end implementation of this project, from uploading datasets from numerous sources to deploying the model at the endpoint. All of these steps are built using the Visual Workflow Designer, from analyzing the data to constructing a model and deploying it.

Data Requirements

The grocery store forecast demonstrated here relies on a variety of data points, including store sales data and transaction data. We will be using the following datasets for this use case.

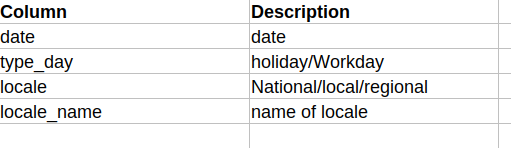

- holiday events dataset: contains information on public holidays

- oil dataset: contains information about the price of oil by date

- grocery sales dataset: contains information about grocery sales by date

- stores dataset: contains grocery store information by location

- transactions dataset: contains the transactions information by store and date

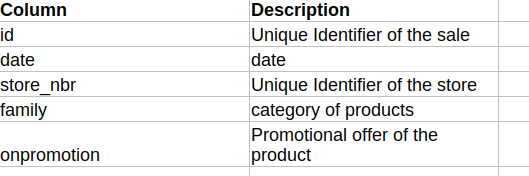

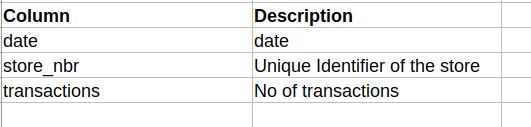

Columns of interest in the datasets:

Holiday Events Dataset:

Grocery Sales Dataset

Transactions Dataset

Model Objectives

The objective is to understand trends in grocery sales over time and to forecast sales by analysing the underlying data, constructing a regression machine learning model, and implementing it after defining the model's major features.

Steps followed to develop and deploy the model

- Upload the data to Xceed Analytics and create a dataset

- Create the Workflow for the experiment

- Perform initial exploration of data columns.

- Perform Cleanup and Transform operations

- Build/Train a regression Model

- Review the model output and Evaluate the model

- Improve the metrics that matter for productionizing the model

- Deploy/Publish the model

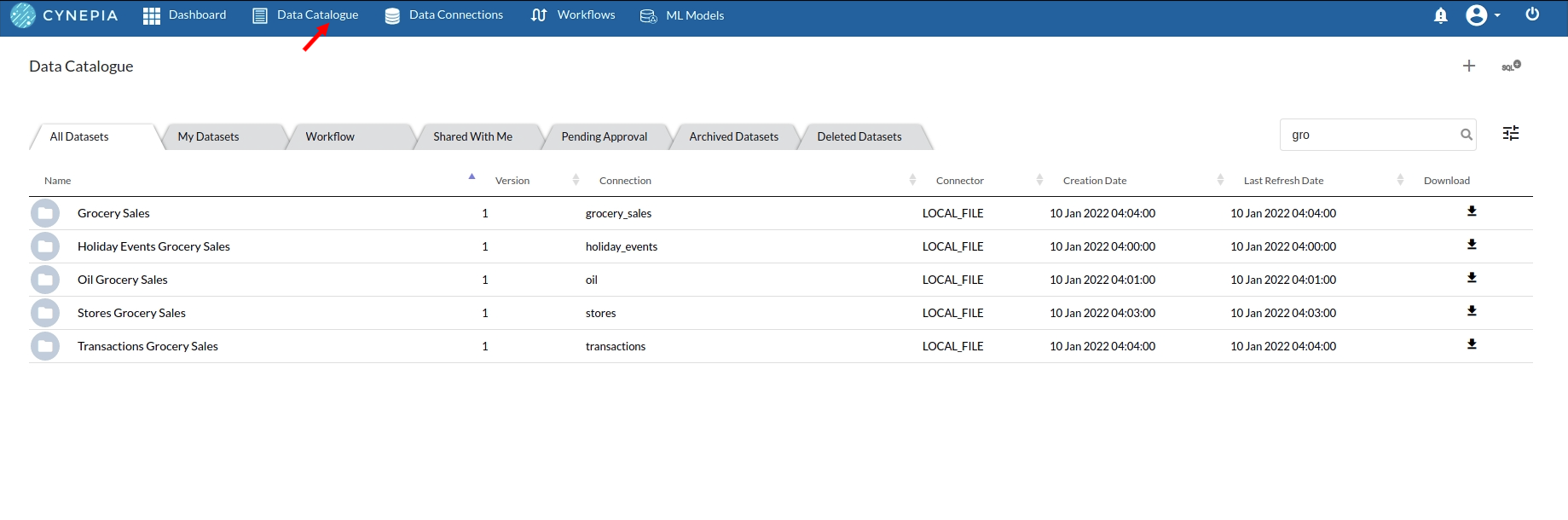

Upload the data to Xceed Analytics and Create the dataset

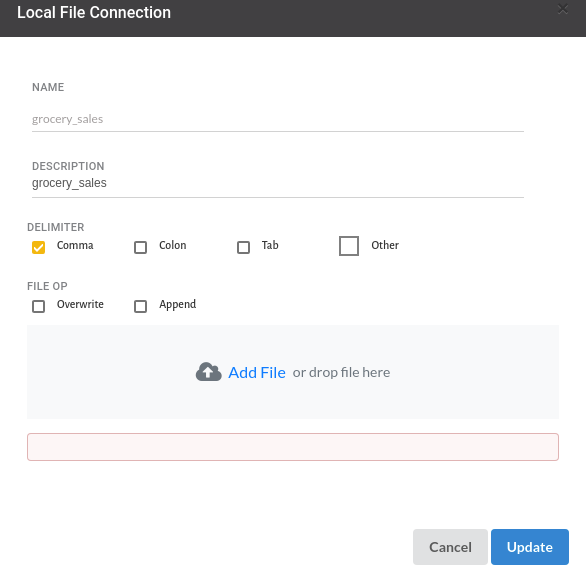

- From the Data Connections page, upload the five datasets to Xceed Analytics: holiday events, oil, grocery sales, stores, and transactions. For more information on Data Connections, refer to Data Connectors.

- Create a dataset for each uploaded data source in the data catalogue. Refer to Data Catalogue for more information on how to generate a dataset.

Create the Workflow for the experiment

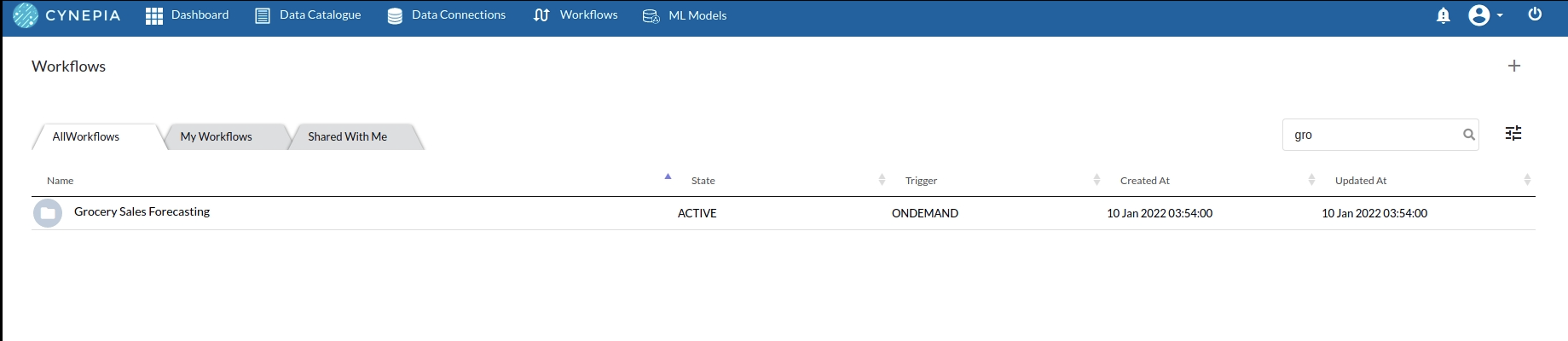

- Let's create our workflow by going to the Workflows tab in the navigation. Create Workflow has more information on how to create a workflow.

- Once the workflow has been created, an entry for it appears on the Workflows page.

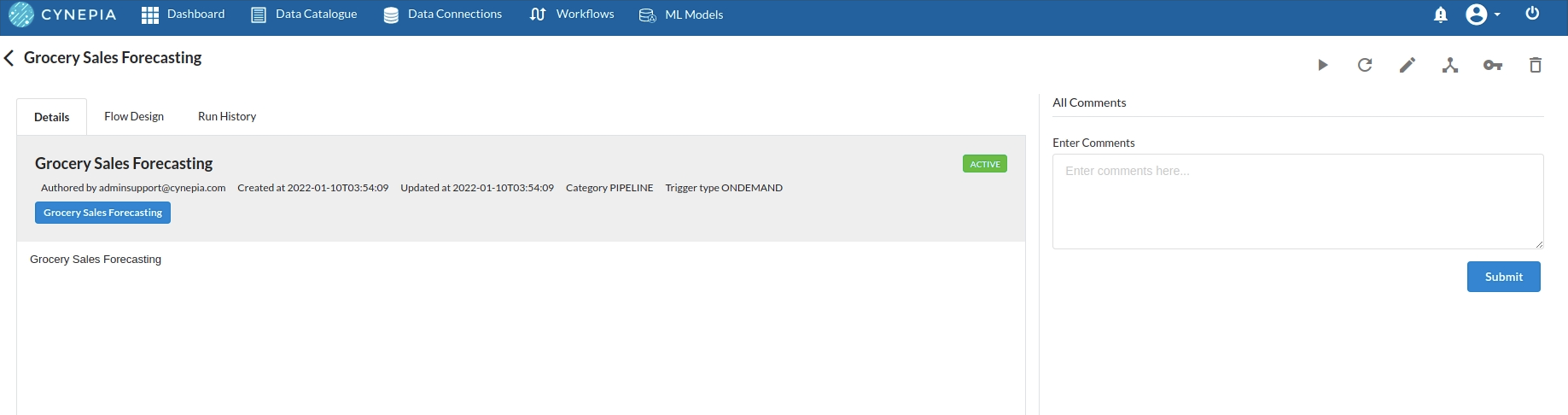

- To navigate to the Workflow Details page, double-click the workflow list item and then click Design Workflow. Visit the Workflow Designer Main Page for additional information.

- By clicking '+', you can add the input dataset. The input step will then appear in the Step View.

Perform initial exploration of data columns.

- Examine the output view with Header Profile, paying special attention to the column datatypes. Refer to Output Window for more information about the output window.

- Review the Column Statistics tab (refer to Column Statistics for more details on individual KPIs).

Perform Cleanup and Transform Operations

- Before we can build our model, we need to perform a few cleanup modifications.

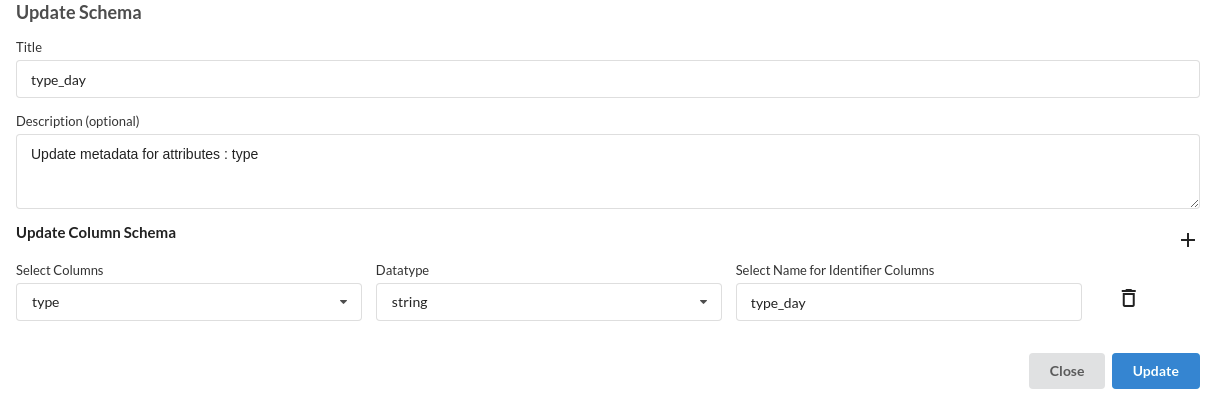

- Under the Holiday Events Dataset, we update the datatype of the day column. The processor used for this step is Update Schema.

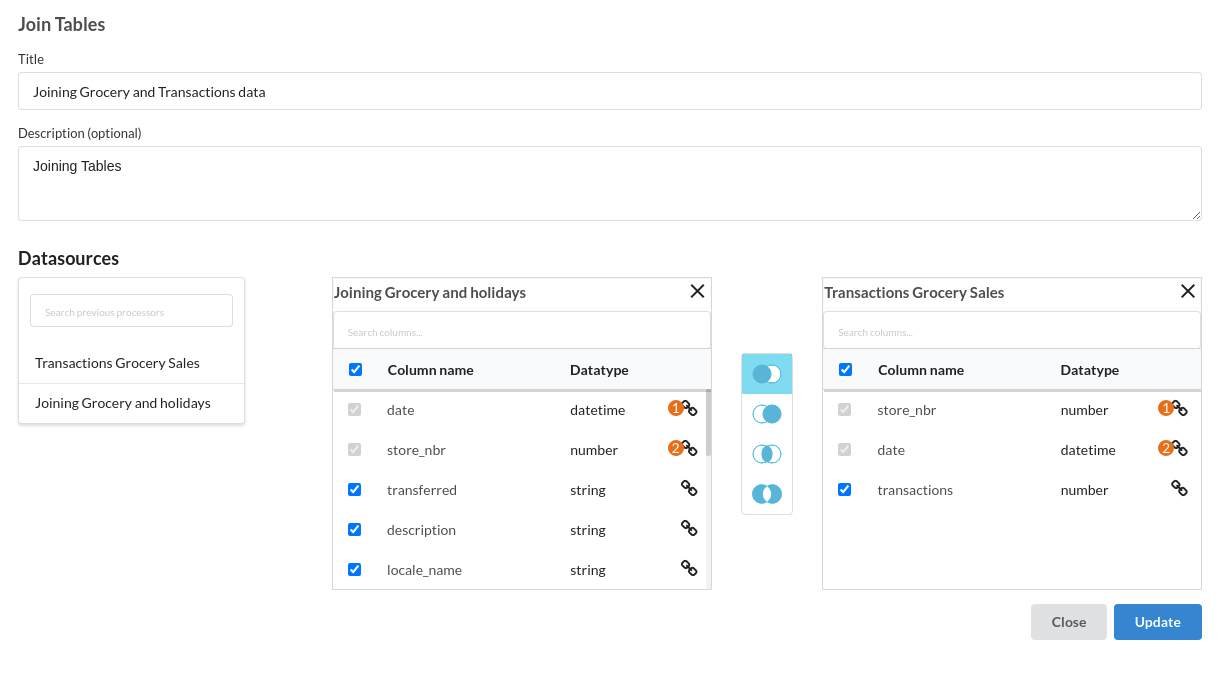

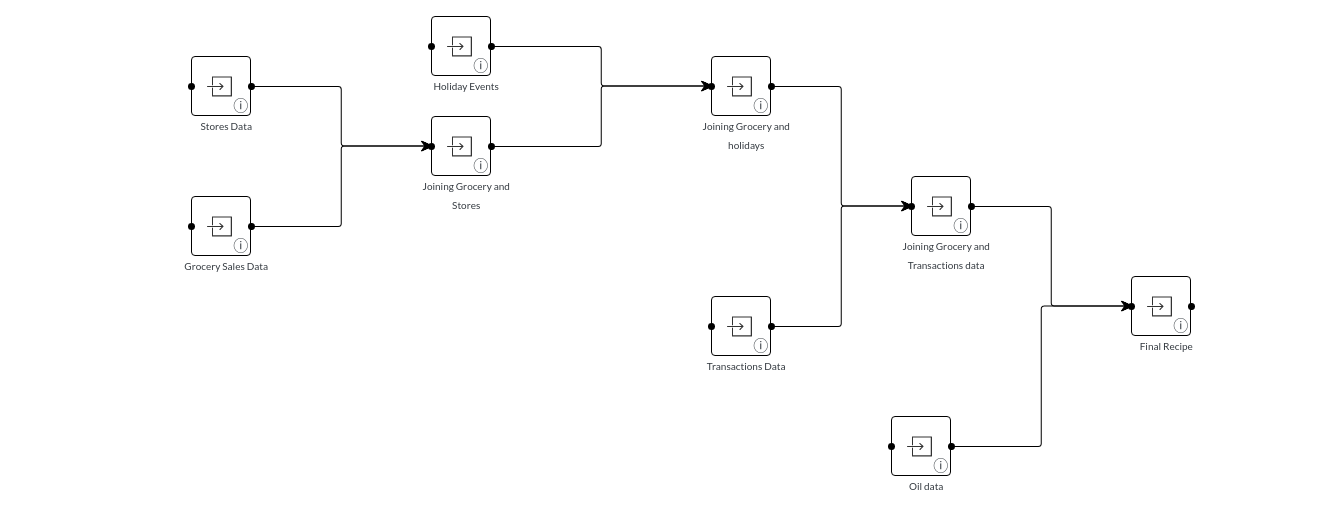

- Join all the datasets to the grocery sales dataset one by one. The processor used for this step is Join tables.

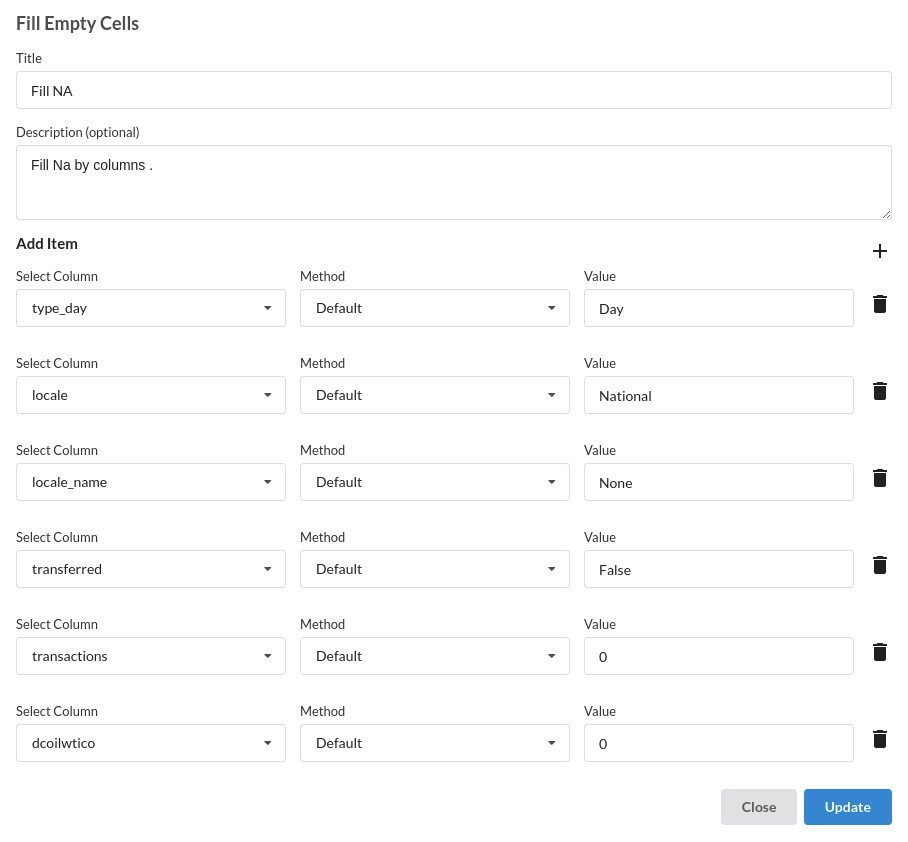

- Fill the null values of columns. The processor used for this step is Fill empty cells.

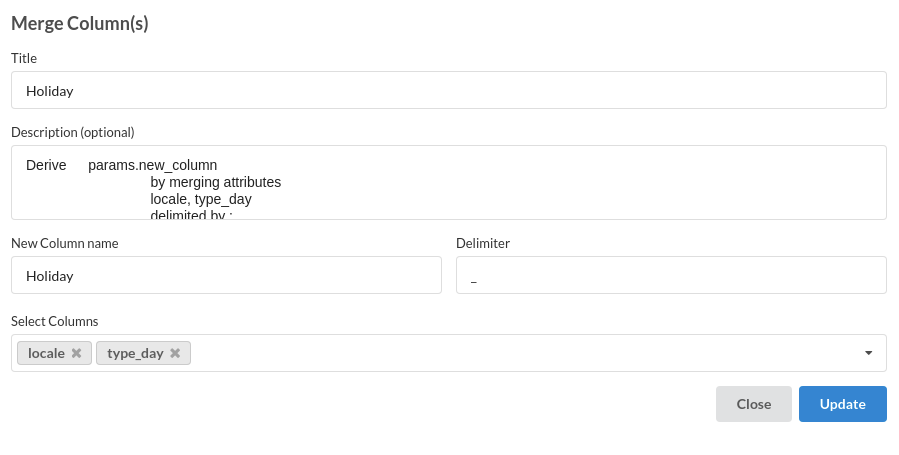

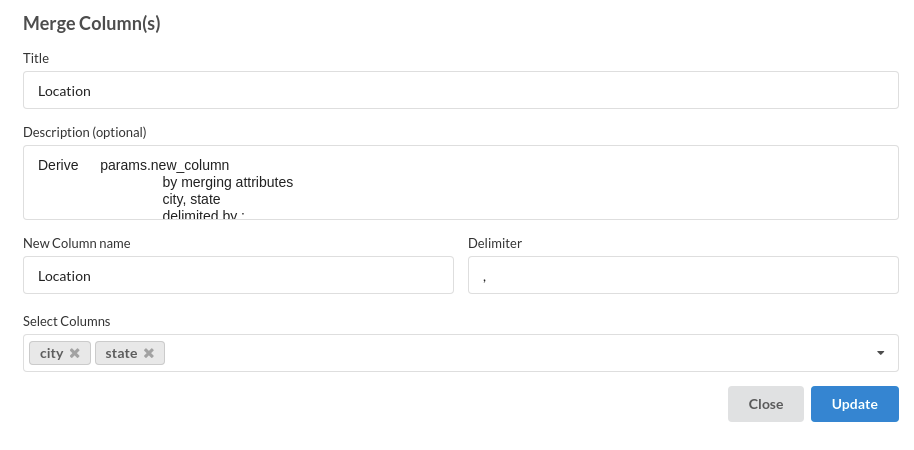

- Merge columns to get the Holiday and Location columns. The processor used for this step is Merge columns.

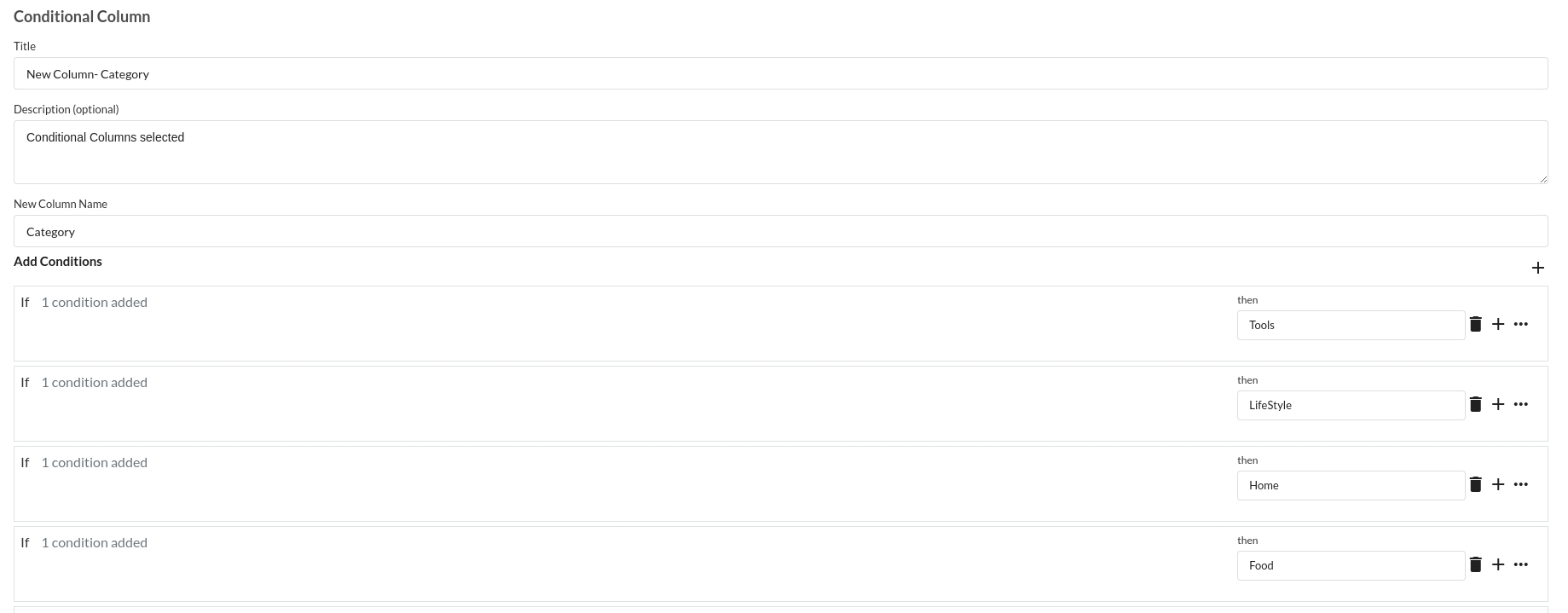

- Add a new conditional column, Category. The processor used for this step is Conditional columns.

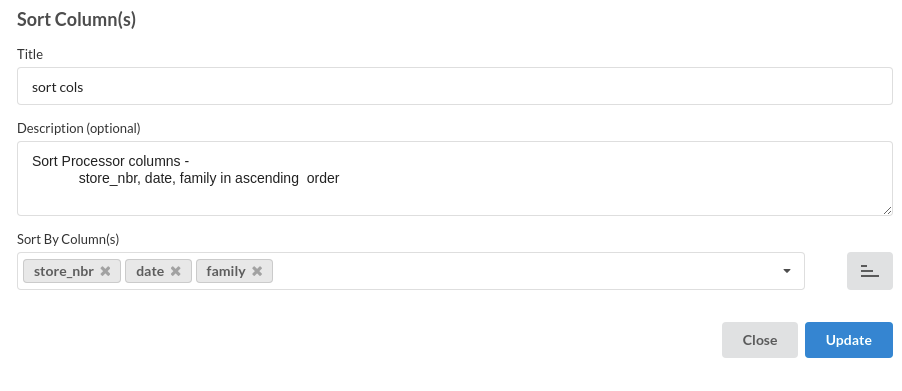

- Sort columns in ascending order. The processor used for this step is Sort columns.

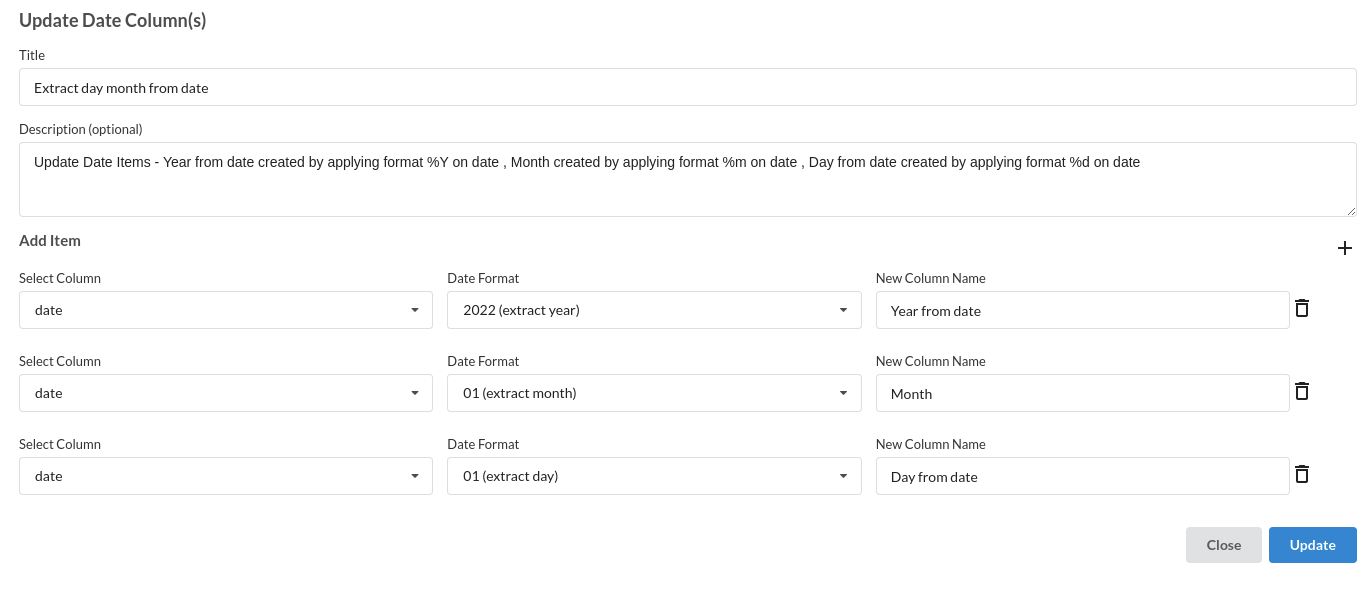

- Rename columns: day, month, and year from date. The processor used for this step is Update Schema.

- The final designer flow looks like the figure below. For more information, refer to Flow view.
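For readers who want to see what these processors do to the data, the cleanup steps above can be approximated in pandas. This is only a rough sketch and not how Xceed implements its processors: the tiny frames, every column name (`store_nbr`, `holiday_type`, `oil_price`, and so on), the fill values, and the rule behind `Category` are all assumptions made for illustration.

```python
import pandas as pd

# Tiny made-up frames standing in for the uploaded datasets.
# All column names and values are illustrative assumptions.
sales = pd.DataFrame({
    "date": ["2017-01-02", "2017-01-01", "2017-01-02"],
    "store_nbr": [1, 1, 2],
    "sales": [120.0, 95.5, 80.0],
})
holidays = pd.DataFrame({
    "date": ["2017-01-01"],
    "holiday_type": ["Holiday"],
    "holiday_name": ["New Year"],
})
oil = pd.DataFrame({
    "date": ["2017-01-01", "2017-01-02"],
    "oil_price": [None, 52.3],
})
stores = pd.DataFrame({
    "store_nbr": [1, 2],
    "city": ["Quito", "Guayaquil"],
    "state": ["Pichincha", "Guayas"],
})

# Update Schema: parse the date strings into a proper datetime type.
for frame in (sales, holidays, oil):
    frame["date"] = pd.to_datetime(frame["date"])

# Join tables: left-join each dataset onto the sales rows, one by one.
df = (
    sales.merge(holidays, on="date", how="left")
         .merge(oil, on="date", how="left")
         .merge(stores, on="store_nbr", how="left")
)

# Fill empty cells: a constant for text columns, the column mean for oil price.
text_cols = ["holiday_type", "holiday_name"]
df[text_cols] = df[text_cols].fillna("none")
df["oil_price"] = df["oil_price"].fillna(df["oil_price"].mean())

# Merge columns: build the Holiday and Location columns.
df["Holiday"] = df["holiday_type"] + " - " + df["holiday_name"]
df["Location"] = df["city"] + ", " + df["state"]

# Conditional column: a hypothetical rule that flags holiday rows.
df["Category"] = df["holiday_type"].eq("none").map(
    {True: "Regular", False: "Holiday"}
)

# Sort columns: ascending by date, then by store.
df = df.sort_values(["date", "store_nbr"]).reset_index(drop=True)

# Derive day, month, and year from the date column.
df["day"] = df["date"].dt.day
df["month"] = df["date"].dt.month
df["year"] = df["date"].dt.year

print(df[["day", "month", "year", "Holiday", "Location", "Category", "sales"]])
```

Left joins keep every sales row even when a date has no holiday or oil record, which is why the fill step is needed afterwards.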

Build/Train a regression Model

- We now have a dataset to work with in order to create our regression model. Before developing the model, we take the following actions: feature selection, feature encoding, and choosing the algorithm and training the model.

1. Feature Selection

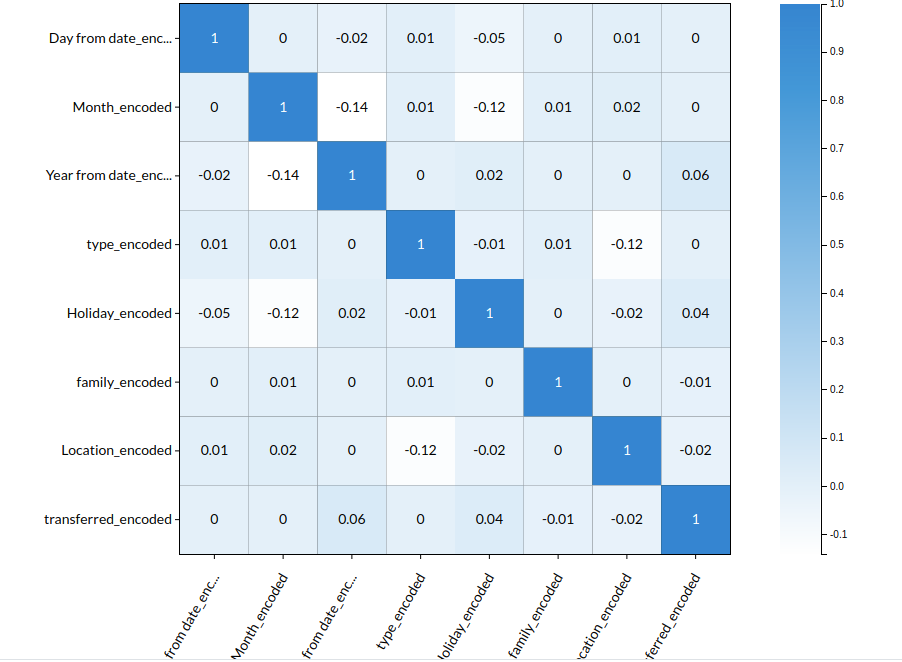

- Go to the Column Profile View and select Multi-variate profile to construct a correlation matrix and manually identify the features of interest. Xceed Analytics shows the Pearson correlation. Select all of the columns that are strongly correlated with the target feature. For more information, refer to Column Statistics.

- Some of the features we chose that can explain our target variable based on the observed correlation are:

- Day

- Month

- Year

- Holiday

- Type

- Location etc

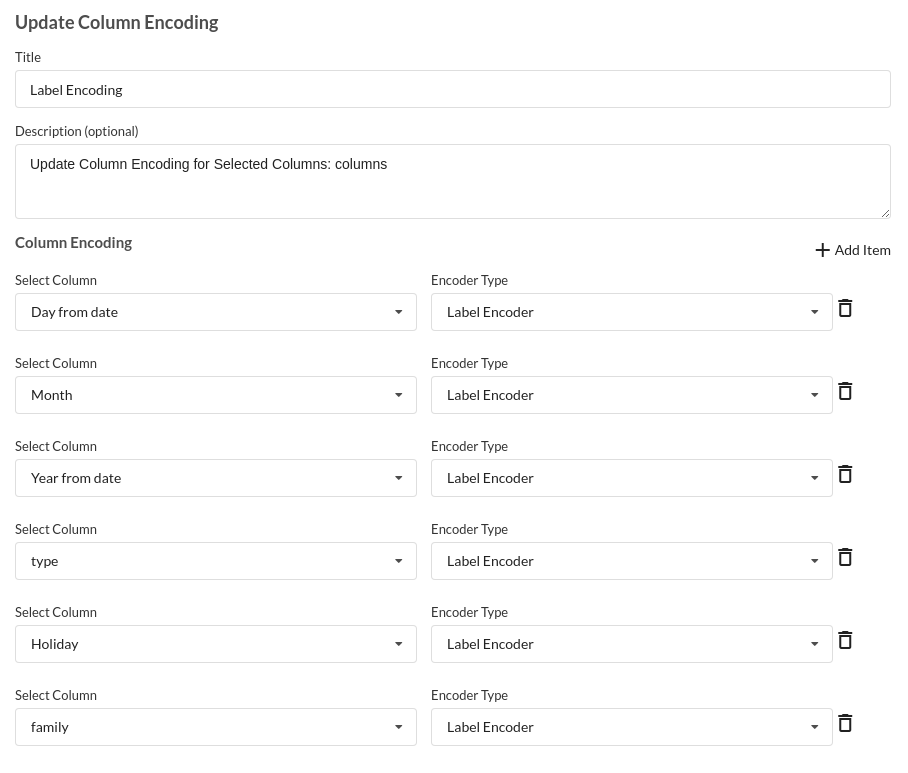
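Outside the platform, the same kind of correlation-based screening could be sketched in pandas as follows. The sample values, the column names, and the 0.9 cut-off are assumptions chosen for illustration; the source does not state a threshold.

```python
import pandas as pd

# Made-up numeric sample; column names and values are assumptions.
df = pd.DataFrame({
    "day": [1, 2, 3, 4, 5, 6],
    "oil_price": [52.0, 51.5, 53.0, 52.5, 54.0, 53.5],
    "transactions": [100, 120, 140, 160, 180, 200],
    "sales": [210.0, 250.0, 290.0, 330.0, 370.0, 410.0],
})

# Pearson correlation of every feature against the target column.
corr_with_target = df.corr(method="pearson")["sales"].drop("sales")

# Keep features whose absolute correlation clears a hypothetical cut-off.
THRESHOLD = 0.9
selected = corr_with_target[corr_with_target.abs() >= THRESHOLD].index.tolist()

print(corr_with_target.round(3))
print(selected)
```

Taking the absolute value matters because a strongly negative correlation is as informative as a strongly positive one.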

2. Feature Encoding

- Take all of the categorical columns and encode them based on the frequency with which they occur. For more information, refer to Feature encoding.
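As a minimal sketch of frequency encoding, each category can be replaced by the share of rows in which it appears. Whether Xceed uses raw counts or proportions is not stated in the source, so the proportion used here is an assumption, as are the column name and sample values.

```python
import pandas as pd

# Made-up categorical column; the name and values are assumptions.
df = pd.DataFrame(
    {"Location": ["Quito", "Quito", "Guayaquil", "Quito", "Cuenca"]}
)

# Frequency encoding: map each category to its share of the rows.
freq = df["Location"].value_counts(normalize=True)
df["Location_encoded"] = df["Location"].map(freq)

print(df)
```

One known trade-off of this encoding is that categories occurring equally often receive the same value and become indistinguishable to the model.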

3. Choose the algorithm and train the model.

- Because we're estimating a continuous variable (sales), we use a regression model. From the Transformer View, select Regression (autopilot) and fill in the relevant information. Refer to Regression (autopilot) for more information on model parameters.

Review the model output and Evaluate the model

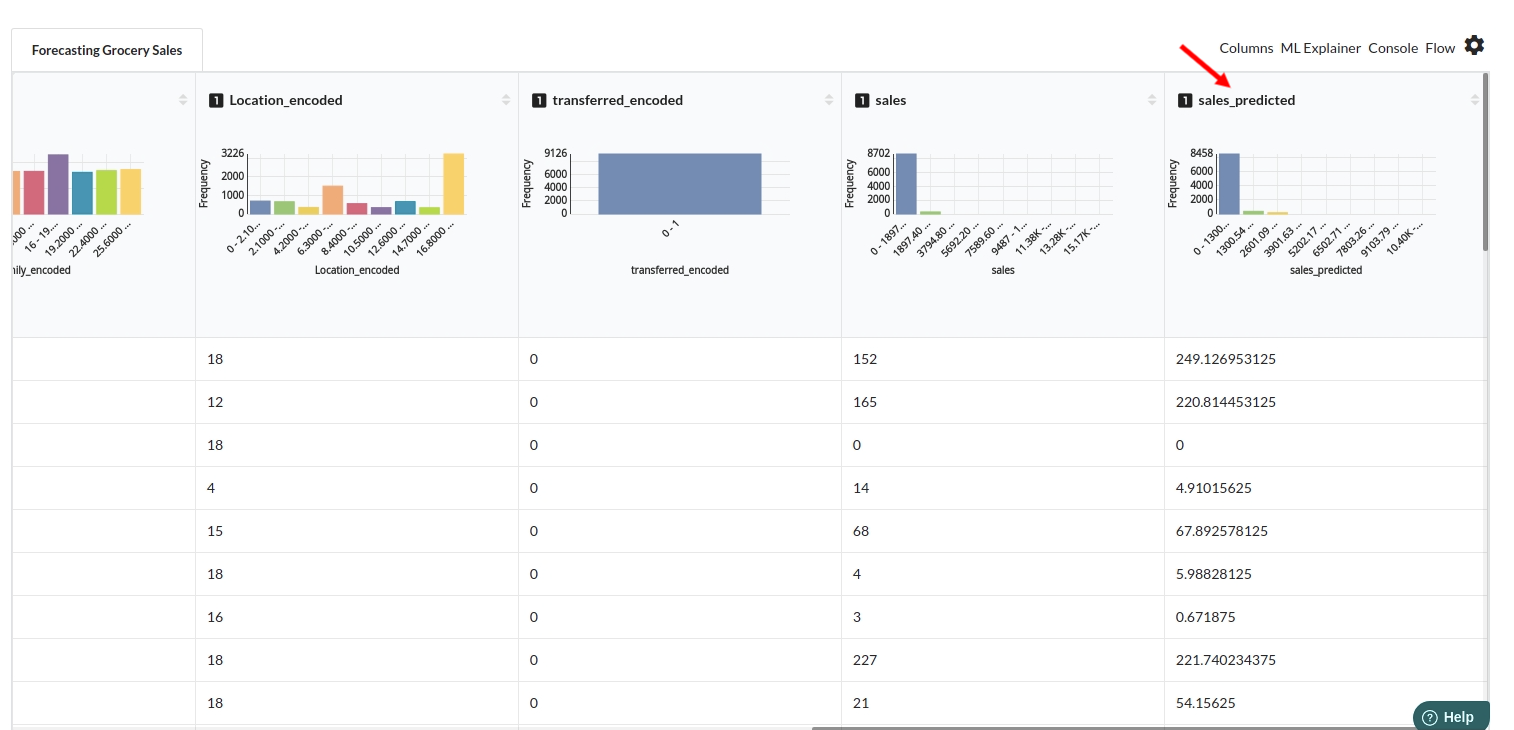

After you finish building the model, it is time to review the model output. Look at the output window to first review your predicted results. Since this is a regression problem, you will get a new column in the view like the one below.

When you finish building your model, you will see another tab in the view called ML explainer. Click on it to evaluate your model.

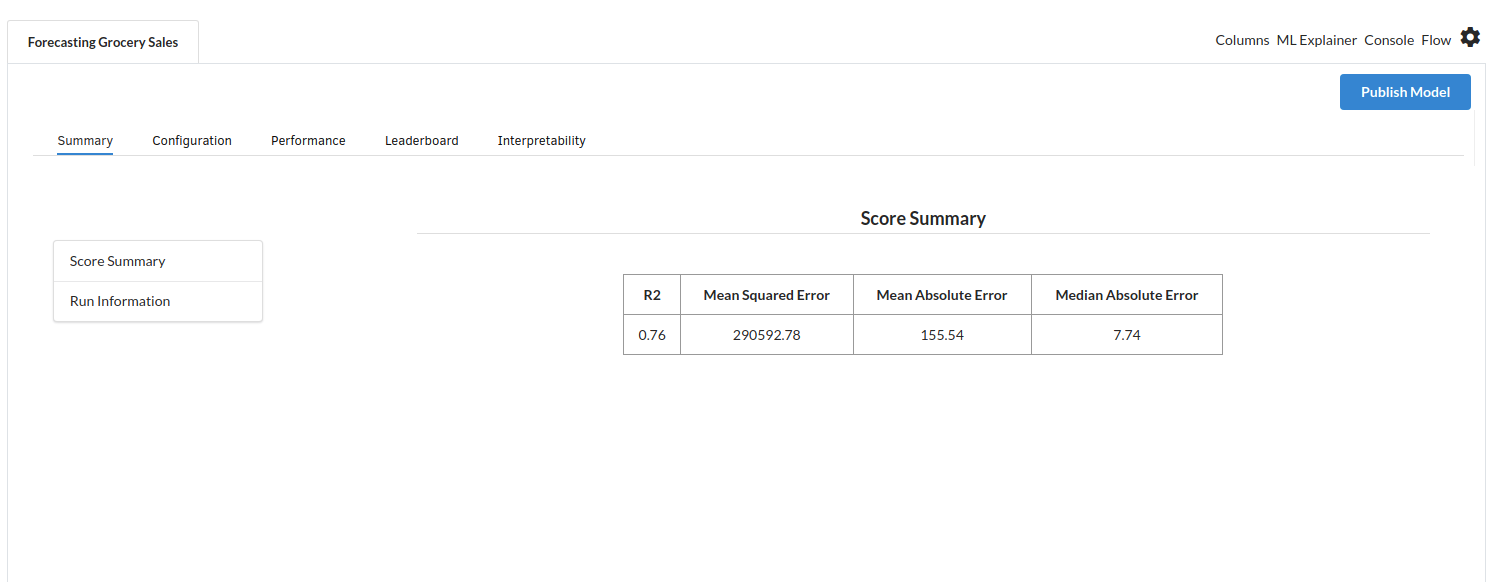

- The first view you see when you click on ML explainer is the Summary view.

Look at the metric scores and the run summary stats. Based on your calculations, decide whether the R2, Mean Squared Error, and Mean Absolute Error meet your expectations. If not, this is your first cue to rethink the training process.

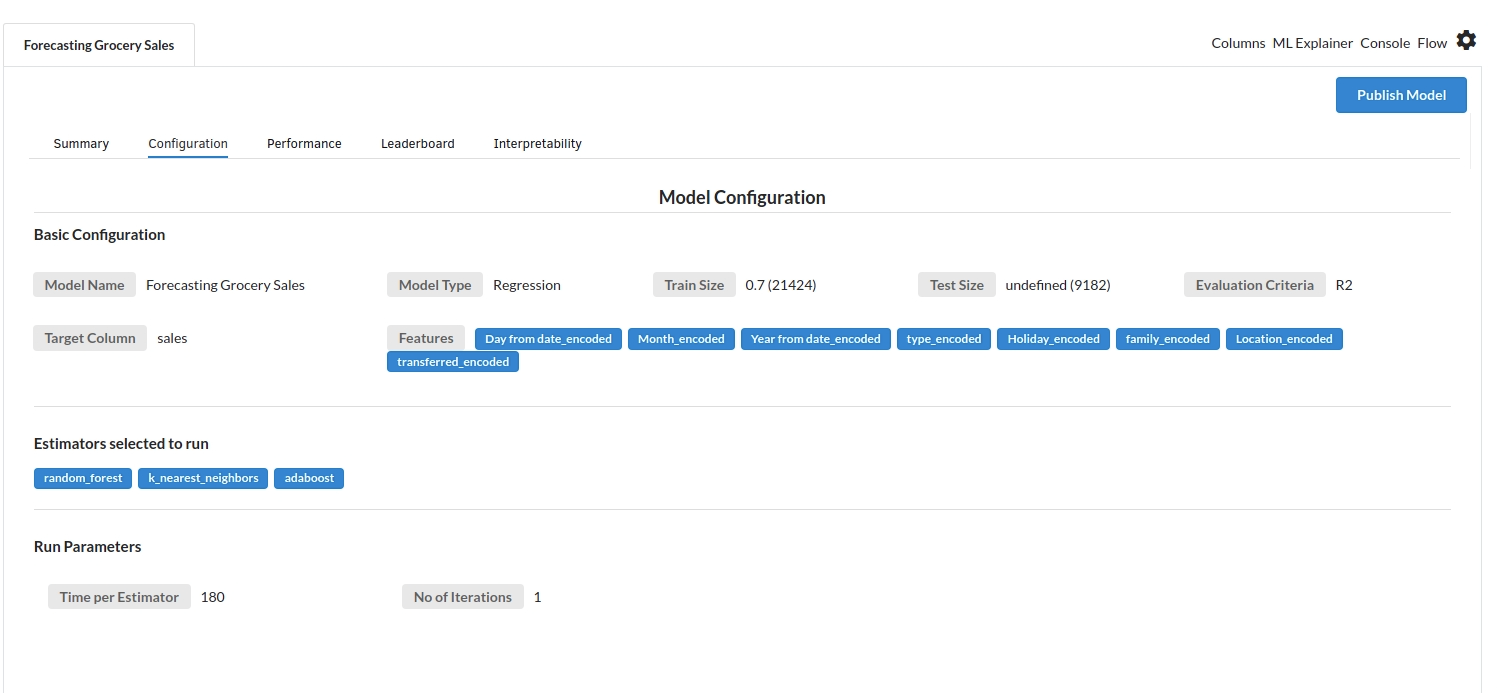
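To make the three metrics concrete, they can be computed by hand from actual and predicted values. The function below is a plain-Python sketch using the standard definitions; the function name and the sample numbers are made up and are not output from the model in this use case.

```python
def regression_metrics(actual, predicted):
    """Return R2, mean squared error, and mean absolute error."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]

    mae = sum(abs(e) for e in errors) / n   # average absolute miss
    mse = sum(e * e for e in errors) / n    # average squared miss

    # R2 compares the model's squared error with that of always
    # predicting the mean of the actual values.
    mean_actual = sum(actual) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    r2 = 1 - ss_res / ss_tot

    return {"r2": r2, "mse": mse, "mae": mae}


# Made-up values for illustration only.
actual = [100.0, 150.0, 200.0, 250.0]
predicted = [110.0, 140.0, 210.0, 240.0]
metrics = regression_metrics(actual, predicted)
print(metrics)
```

An R2 close to 1 together with low error values suggests a good fit, but what counts as acceptable depends on the scale of sales in your data.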

- The second view under ML explainer is the Configuration view.

The Configuration view shows the information you filled in for the Regression step. The view looks like the one below.

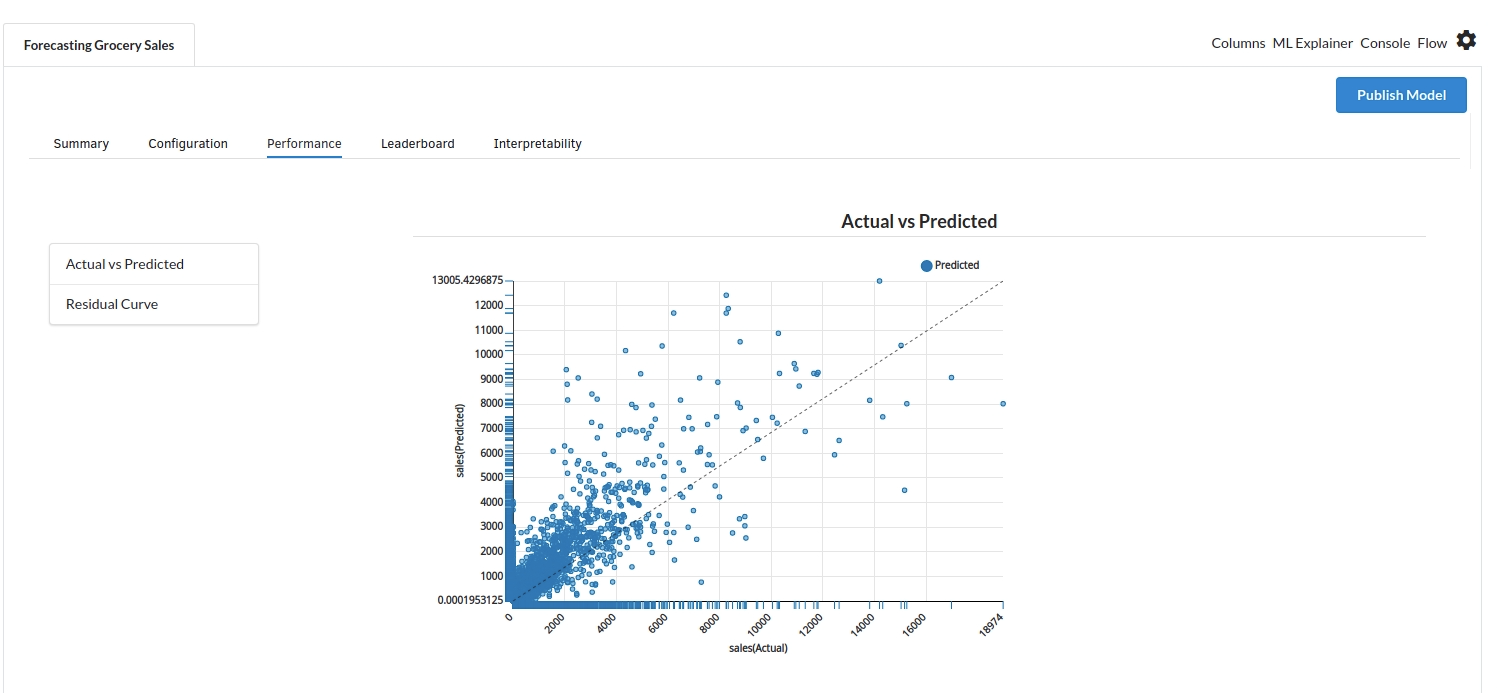

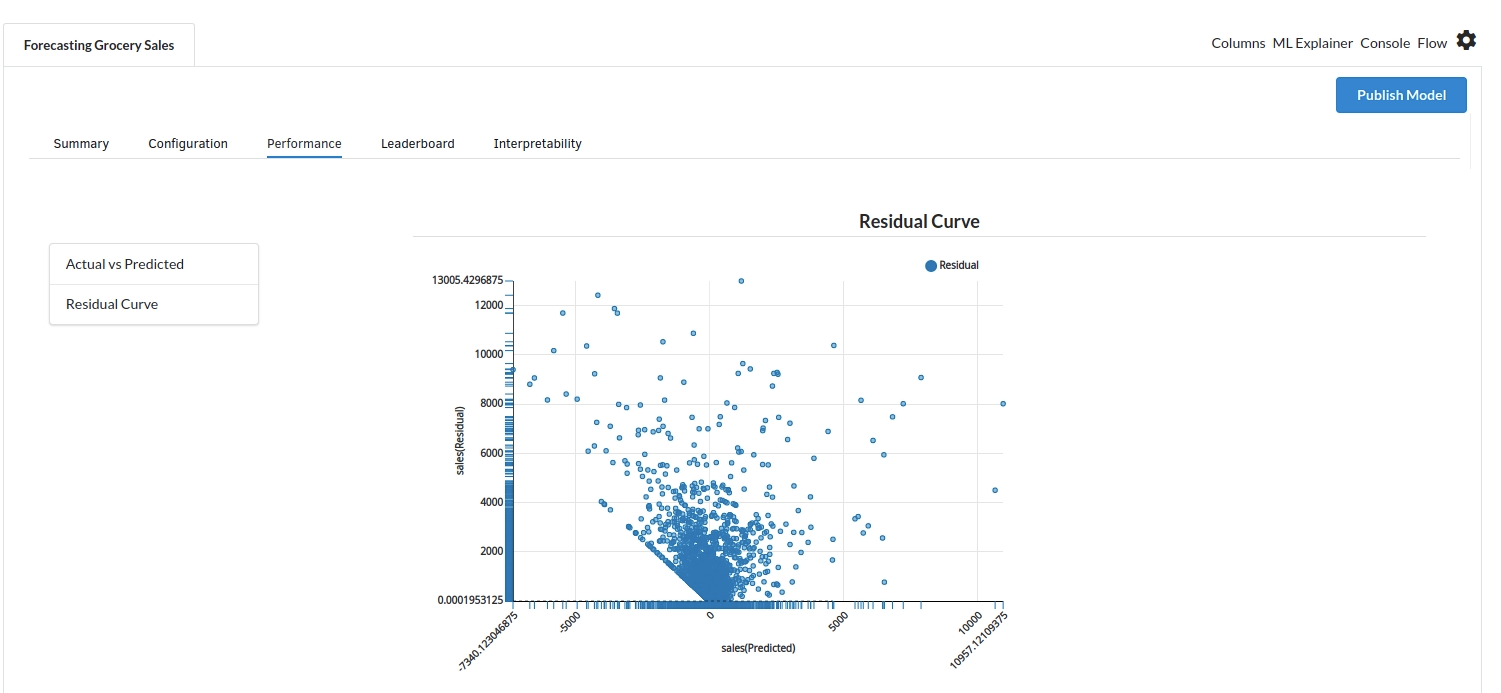

- The third view under ML explainer is the Performance view. You can see the actual vs predicted and the residual curve charts for regression. Look at the charts and decide whether they are good enough for your model. The actual vs predicted chart is a good indicator of how well your model was trained.

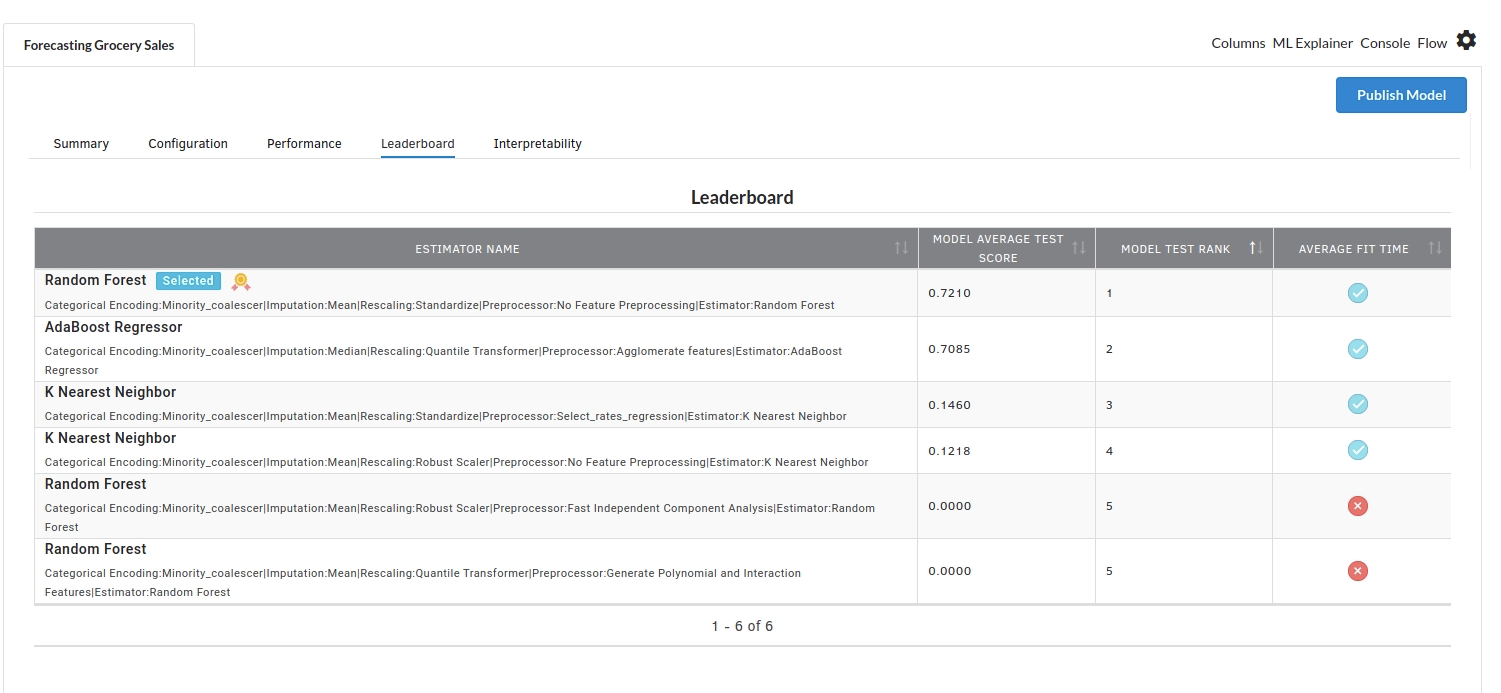
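The residual chart plots, for each row, the actual value minus the predicted value. A small sketch of that calculation, using made-up numbers rather than results from this model:

```python
# Made-up values for illustration only.
actual = [100.0, 150.0, 200.0, 250.0]
predicted = [90.0, 155.0, 190.0, 265.0]

# Residual = actual - predicted, one value per row.
residuals = [a - p for a, p in zip(actual, predicted)]

# A mean residual near zero suggests no systematic over- or under-prediction.
mean_residual = sum(residuals) / len(residuals)

# The largest miss in either direction points to rows worth inspecting.
largest_miss = max(residuals, key=abs)

print(residuals, mean_residual, largest_miss)
```

Residuals scattered evenly around zero are generally a healthier sign than residuals that grow or drift with the predicted value.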

- The fourth view under ML explainer is the Leaderboard. In this view you can see the algorithms that were trained and the feature engineering applied to each, along with a ranking system that identifies the best algorithm trained.

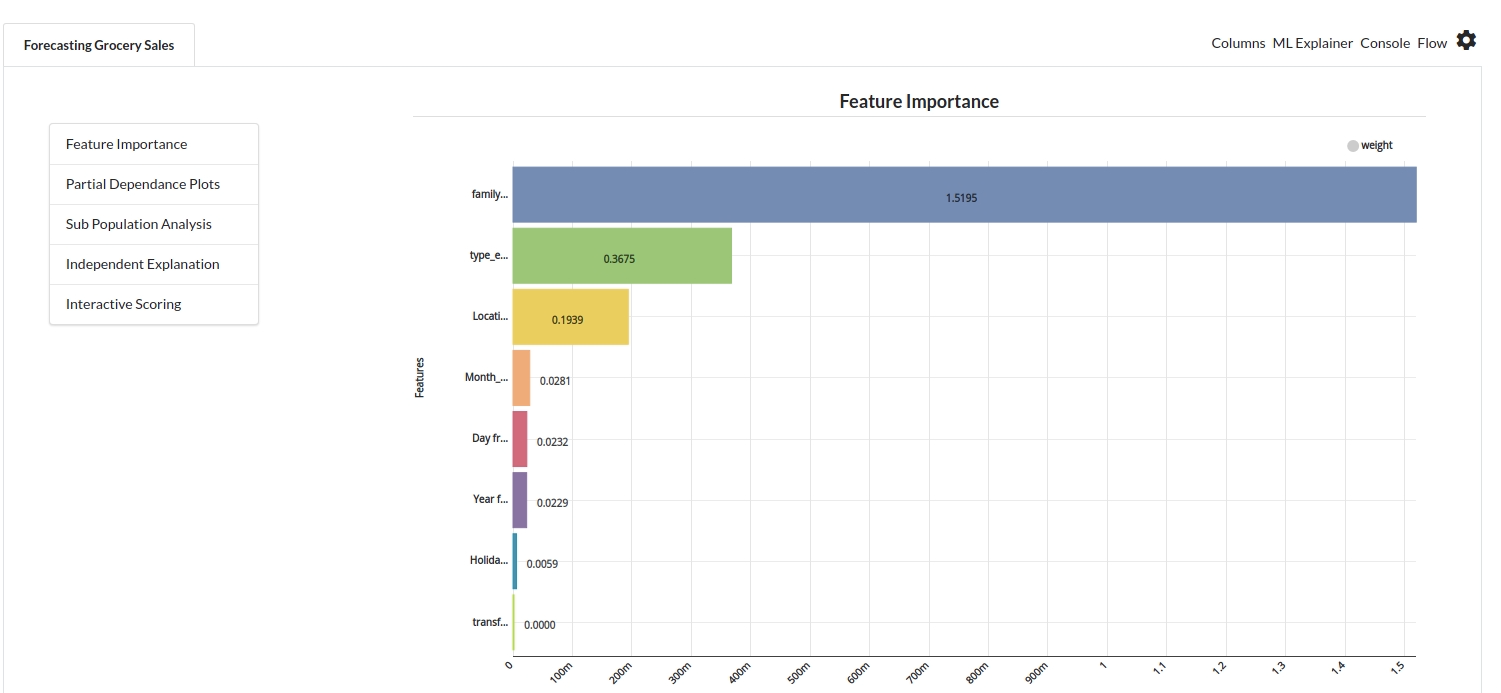

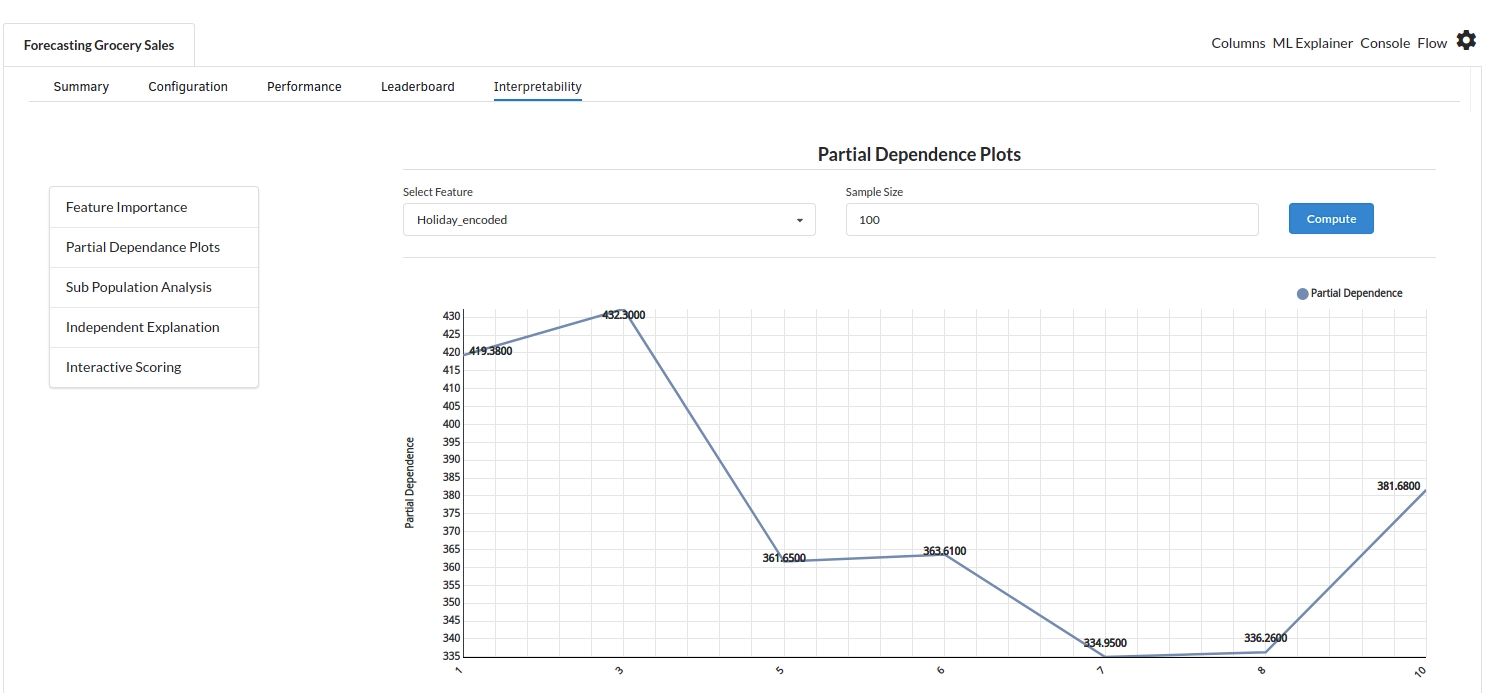

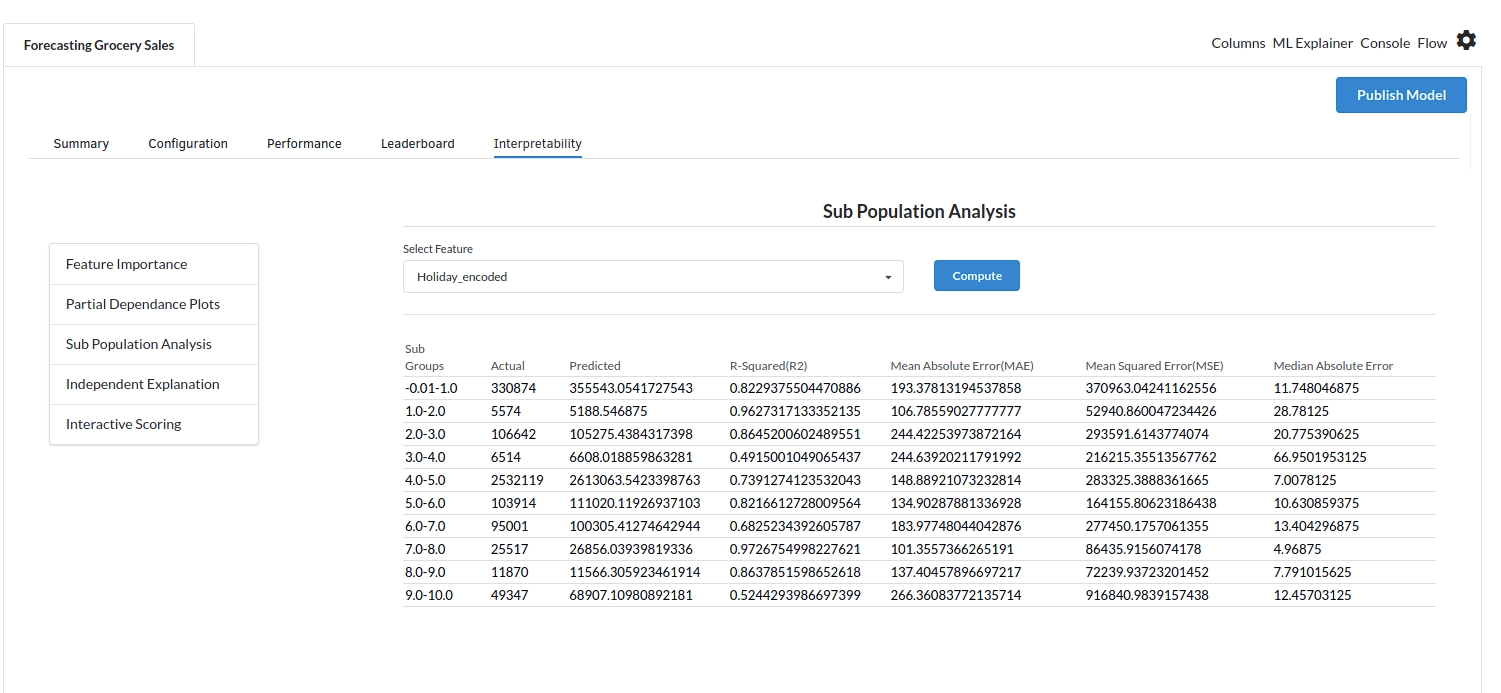

- The last view you see under ML explainer is Interpretability. In this view you can interpret your model in simple terms, with results covering feature importance, PDP plots, Sub Population Analysis, Independent Explanation, and Interactive Scoring. For more information on these results, refer to Interpretability. The Interpretability tab and the results under it look like the one below.